Finding 94123 Solutions to a Math Problem

I have a thoughtful weekend to myself, in the middle of a crazy bit of life.

My blog is now 3 years old. I’ve changed so much in those 3 years, and the amount I feel inspired to write waxes and wanes along with the changes. Today, I want to write. As for what to write about, there are many options. There is always Opus Magnum, where 2024 had far more records than just the main campaign records covered in these posts, and a new tournament is in progress. Or I could write about life, but that’s a story I’m still experiencing and there’s nowhere I could reasonably start and end it. Instead, I will write about math.

Over the course of 2024 I wrote some cool code that fits into a cool story. This code relates to my fascination with the Freudenthal Problem. Thanks to the code, I now know the smallest 94123 solutions, to a problem that is rather intense to find even one solution for. What does one do with them? Is 94123 a lot? Why such a specific number, which happens to also be the zip code of Fort Mason? Let’s dive in. It’s story time.

The Freudenthal Problem

I’ve written twice about this problem before – once for Summer of Math Exposition 2022, and then again in early 2024 with some fresh results. Consider this the third installment of the series. For a very short refresher, a solution to the Freudenthal Problem is any pair of whole numbers both greater than or equal to 3, allowing a very particular dialogue between two perfect mathematicians:

- Both are told that the numbers are 3 or greater, and that Sam knows the sum and Polly knows the product

- Sam tells Polly “you don’t know the two numbers”

- Polly tells Sam “that was true, but now I do know the two numbers”

- Sam responds “now I do too”

Read the previous posts for the full detail, but here are the vibes:

Any pair of numbers that solves the puzzle, must strike a perfect balance. The factorization of the product must be ambiguous, but only barely, so that a single extra piece of information from Sam reveals the answer. Then every alternative pair of numbers with the same sum, must fail to be a solution in some way. As the sums get larger, this last condition becomes so much work to check.

First Foray

When I first heard the problem in 2014, I wrote some bad python code (like any respectable mathematician). We were given a slightly simpler version of the problem, one that had an upper bound on the numbers. My code found the unique solution: (16, 13). This post will largely ignore the question of why it is a solution, see the earlier installment for that. Instead, we tell the story. Since I had a solution using code, I could mess around with the bound.

Increasing the upper bound, I was able to determine that there were multiple solutions! The next one was (16, 73). This got me a little excited, there was some cool collection of solutions to be discovered. Maybe I could get my name on it. But I didn’t feel like I had done anything specifically worthy yet. Anyone could wonder about this problem. And so I looked to find any papers.

I found one: The Freudenthal Problem and its Ramifications, written in 2006 by the team of Axel Born, Cor A.J. Hurkens, and Gerhard Woeginger. My new favorite interesting math problem had at least some admirers in the world. I reached out, but got no reply. I’ve learned that in the time since, Gerhard Woeginger passed away. But their paper gave me some language to use to talk about the problem, and a set of conjectures and results. Before this, I had only known it as “the alien arithmetic problem” since that’s how Prof Benjamin had posed it.

Unified Language

These authors had investigated “Freudenthal(m, M)”, the general name they gave to this problem where the lower bound is m and the upper bound is M. (Actually, they go to some pains to distinguish the cases where the numbers are and aren’t allowed to be equal, I’m going to ignore that entirely for clarity). Accordingly, the code I had written in python was listing off solutions to Freudenthal(3, M) with the goal of M → ∞.

They found what they named “stable solutions” and “phantom solutions”. A stable solution is one where for all sufficiently large M, the solution works. In other words, a stable solution is a solution to Freudenthal(m, ∞). Conversely, a phantom solution only works up to a certain maximum M. Phantom solutions are not solutions to Freudenthal(m, ∞) even though there exists some M where they solve Freudenthal(m, M). In their words:

We have checked all pairs (x, y) with x + y ≤ 50.000 with the help of

a computer program … there are 804 stable solutions and 288 phantom solutions for Freudenthal(3, ∗)

This was a good starting point! I kept obsessing though, and eventually I had much better results of my own.

2016

I do tend to focus on low level optimizations when I write code, and even though I was a young and relatively inexperienced coder, I found plenty. Now in 2016, confident enough to write C code, I monopolized my laptop’s memory, sieved out a bitset of products that were consistent with every statement but the last, and then looped over all sums, looking at specific entries in this bitset to compute a value for each one.

- If the value was 1, the sum had a stable solution (and the program could easily deduce which pair it was).

- If the value was 0, the sum corresponded to a phantom solution. This was a nice consequence of solving the problem in the way I did – I understood phantom solutions now.

- If the value was greater than 1, it was not a solution, but the value could still be associated with that sum as a sort of function: s → F(s).

When I graphed that function, I fell further into the rabbit hole:

Again, the older posts talk in detail about why this is how it is, but in terms of the story I definitely felt like I had stumbled into a unique bit of mathematical beauty here. The 2006 paper never produced a visual like this. I got to name it, and I picked the name Freudenthal’s Comet.

What I was doing was new, and probably meant I was deeper into this problem than anyone ever before.

New Results

My code found the 804 stable solutions with sum less than 50000. But it went ten times further, all the way out to a sum of 532151. If I recall, I wrote it in a way where I had to gracefully terminate the running process before it would tell me how far it got. So this number isn’t really meaningful, it is just where it happened to be when I woke up in the morning and ended the overnight run. What I do know, is that beyond a sum of around 250000, the code became horribly inefficient. It would take weeks of running to make it to a sum of a million, even though it got to the halfway point overnight.

At that point, there are 3141 stable solutions. You might wonder, what can we learn from 3141 solutions that we couldn’t already have learned from 804? Well, lots of the solutions have one of the numbers a power of 4. I now had many solutions with 65536 and 262144 in them. To me, every time a number showed up frequently, the other numbers that went with it became an interesting integer sequence. So I now knew that the sequence for 65536 started 523, 709, 1783, 2113, 2143.. And the sequence for 262144 started 2287, 2797, 3307, 4327, 5347..

That run also found a solution that surprised me, namely the pair (4, 137233). Prior to this, there were no known solutions with a 4 in them. The sequence for 4 hadn’t even been a dream, but now I knew it started with 137233. Did it continue?

I was invested in the story of each of these integer sequences. My favorite sequence was the one for 16, which was still going, now up to 608 different companions.

Deep in Thought

It was never going to be possible to brute force to infinity. I wanted answers to questions that brute force wouldn’t give me. I looked into why the comet had these branches, and was able to form some new conjectures of my own. The branches started to make sense, as part of a fractal that crept slowly away from the 0s and 1s that would ever give solutions. In fact, I believed at the time that there were actually only a finite number of solutions at all. I thought I had found the largest one too, the pair of (2120005897729125501632512, 2261497405430902850470057).

After a couple years of waxing and waning interest, I summarized my thoughts in a post on mathoverflow. As part of that post, I let out a feeble plea:

So, my question to mathoverflow is: Has there been anyone else thinking about this problem, and do they have results that supplement/contradict/dwarf those I have gathered?

Nobody seemed to have anything close. I was the Preeminent Freudenthal Enthusiast. I could live with that.

It also meant that when I was wrong, I had to be the one to catch it.

Wake-up Call

I remember fiddling with this problem again in 2019, using Mathematica this time. My goal was to understand “why” a specific phantom solution had F(s) = 0. This one was strange; others that my method suggested would behave similarly had higher numbers like F(s) = 30. When F(s) = 0, it has always meant something that Terence Tao likes to call a “conspiracy”. Something in math that to the untrained eye looks random, but has a hidden pattern that comes out in just the right way to make number theory interesting again. A random outcome won’t fail 100% of the time given so many attempts, unless it really truly is unable to succeed.

I was on the right track with the idea of a conspiracy. The conspiracy I had already discovered was why it was at all possible to show that the huge solution was a solution. But the missing part was sitting in that one phantom solution. Eventually, Mathematica showed me that for all of the failures that I thought would have a chance to succeed, they failed because of the number 5581.

That number didn’t belong to any of the conspiracies I was aware of. It was something new, and it blew the problem back open.

Fermat and Mersenne factors

The magic numbers in the conspiracy are always factors, greater than 5, of some number that is a power-of-four plus or minus 4. Once the sum passes a given power, these are how it remains possible for Sam to say the last line of the dialogue. The smaller the factor, the more solutions are able to survive. If the factor is too large, solutions become so rare as to perhaps dry up forever.

432 – 4’s smallest appropriate factor is 715827883. However, 432 + 4 is divisible by 5581. A smaller number implies more solutions exist, and now I knew how to find them.

Finding these factors is closely related to finding factors of Fermat and Mersenne numbers. The connection is explored in a lot more detail in the earlier posts, particularly the second post. While spending my time grappling with the ramifications of these factors, my perspective on the problem kept updating.

I had seen the 2006 paper conjecture there were infinitely many based only on seeing the first 804. I had begun to believe that the upper limit was a few dozen digits, then over a thousand digits, and then a combination of Fermat factors seemed to indicate the conjecture was right after all.

But my love for the integer sequences meant that discovering an infinite branch of solutions wasn’t enough. You see, these infinitely many solutions had a very particular form to them. That form didn’t ever include a power of two. I wouldn’t rest until I had a good understanding of what happened to 16.

Back to Brute Force

It was 2024, and the last time I had run a brute forcer for the problem I hadn’t been aware of any of the conspiracies. There were so many new optimizations I could make. With a more powerful computer, much more experience writing code, and a new shortcut I could make using the conspiracies, I had a very powerful new program.

Hintskip

Previously, the expensive loop of the program would look at a given sum, say 1000017, and ask a specific question about every product you could make from its summands. 3 × 1000014, 4 × 1000013, 5 × 1000012, etc. This loop ran until either F(s) > 1, so it could exit, or until it had examined every single product. For solutions (stable or phantom), that meant it needed to examine every single product, a very slow operation.

I implemented what I called “hintskip.” Before running the loop, the hintskip routine would check the sum for conspiracies. If it found any, it would provide hints to the loop control that skipped any iteration that were guaranteed not to increase F(s). Ultimately the loop would only run one in 4k iterations, where k vaguely measures the strength of the conspiracy. If k = 5, which was true of many of the solutions the old program had generated, that’s a factor of 1024 speedup.

Now, when there weren’t conspiracies, F(s) was always much greater than 1, so the loop would still exit early. But when F(s) ≤ 1, that was precisely because of conspiracies. The bigger the solutions, the stronger the conspiracies needed to be. For that reason, hintskip made a dramatic difference. We would consistently end up in either of two cases but both cases were good!

If I was wrong about the landscape of the problem, then I would be missing a conspiracy. I was prepared for this, given what I had been through. But, gloriously, this new program was fast. The speed was yet another encouraging sign. I wasn’t missing something major.

Letting it run

I was a little kinder to the user than back in 2016, and so my program would now stream results to a file. I didn’t have to end the program to start to look at data. Because of this, I just let it run and run. Every half hour it would crank through a million or so sums, barely slowing down as it climbed.

I had to choose to stop it eventually, but I pushed it for a couple weeks. I decided that the stopping point would be 229 as a sum. Getting to 230 might be a rounder milestone, also passing a billion, but that doubled the expected time I would spend running the program.

So when I stopped, I had all solutions up to 536870912. This was another thousand times higher than in 2016, which was already ten times higher than the 2006 paper.

In my streamed results file were a whopping 94123 solutions. What to do with them?

Data visualization

What I really wanted was a way to take some of the crazy predictions I had made about the long term behavior, and at least verify that these predictions weren’t horribly off-base when applied to solutions that came out of the brute forcer. I wanted a graph that showed real and predicted trends and compared them. What would such a graph look like?

Because features tend to happen when the exponent passes a new power of four, I chose a logarithmic plot. The base of the logarithm was 4, so I decided to refer to the unit on the axes as “4ders of magnitude”.

My predictions never were able to say exactly where the solutions were, only how many there would be in a certain interval. So that’s what was on the y axis. In each (logarithmically scaled) bucket, how many solutions did I think there would be, and how many were there? The prediction is in orange, and the real data is in blue:

That’s exciting! We predicted lumps, and we see lumps! Our old data only made it to sums around 49.5, while the new gets to 414.5. The new brute force data lets us see that the predicted lumps almost line up!

Why are they there in the first place?

Labels and Conspiracies

Recall from the hintskip section, you can calculate the “strength” of a conspiracy directly from the sum. Using this calculation, we can label every solution by its strength. These labels can be prime numbers, powers of two, or (in theory) infinity. The smallest solution with label infinity has sum at least 432 so all of our brute forced solutions are labeled by either primes or powers of two.

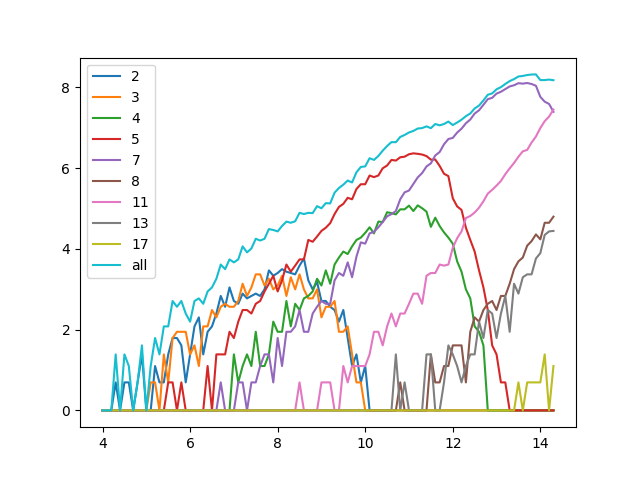

So if we filter down our 94123 solutions by label, we find the following:

Quite the distribution. But remember, we stopped at a pretty arbitrary place. Many larger solutions could exist with these labels. Another buckets graph could help make more sense:

In order to see the rise and fall of the smaller labels, I moved to a log-log plot where both axes are now 4ders of magnitude. But with this scale, close to the bottom of the graph, a difference of one or two solutions makes a big jagged spike. It’s just something to live with. The important feature is how the labels each come with a distinctive hump.

A conspiracy with strength of k = 3 is not strong enough to give you solutions once the sum gets larger than around 410, so the orange hump nosedives back into the axis. The conspiracy with strength k = 5 stops being strong enough at around 413. At the point where we stopped, strength 7 has started to turn around but is definitely still able to make new solutions.

This is where the lumps come from – each label has its own trajectory. The landscape of the problem as a whole is the aggregate of all of the labels. Add humps, get lumps.

Implications

With our 94123 solutions, we have almost certainly seen the largest solution with label 2, 3, 4, or 5.

- the largest solution with label 2 is 123568, 1489753

- the largest solution with label 3 is 283648, 1715821

- the largest solution with label 4 is 32313088, 68111773

- the largest solution with label 5 is 67929088, 28058311

For a handful of reasons, the humps for power-of-two labels behave differently from the humps for prime labels. One of those reasons is that the pair (16, x) gets to exist in any prime label hump, but cannot exist in a power-of-two label hump, so the power of two humps start significantly later (8 and 13 are starting in near unison above). Another is that due to a particular property of factors mod 5, they end more like a hump of label k + 0.79. A modest shift but enough to make 2 and 3 sit close, and 4 and 5 sit close.

As for when the humps end, it’s something like k + 0.1ln(k)4. It’s close enough to k, even if for the early humps it looks like more than twice k.

When a hump ends, the kinds of solutions that can only exist within that hump end with it. My beloved integer sequences live and die with the humps. The sequence of values that can be paired with 64 is contained entirely within the hump with label 2. Accordingly, I know all 175 terms. The largest is 999217. The sequence paired with 1024 ends at 56340967 after 692 terms. The sequence paired with 4096 ends at 66975373 after 2526 terms.

However, there’s some complication if the exponent is itself a power of two.

4, 16, 256, 65536..

These numbers show up in lots of humps, and the rules governing them are more complicated than I want to get into. Suffice to say, we haven’t seen the end of them yet. Notably though, 16 could show up in any hump so long as the label on that hump is a prime. This is why 16 continues on strong.

Predictions vs Data

By comparison to our predictions, the data is a great match.

And unlike the data that would take weeks to even reach 15, it is very simple to extend the prediction:

Into the Crazy Part

Beyond 432, the prediction is still simple to make, it just looks very very strange.

We have Humps, Towers, and a Ramp. The humps are prime labels, the towers are power of two labels. The ramp is the collection of solutions with label infinity, which absolutely becomes the dominant source of solutions once the sum grows.

The fact that the y axis is logarithmic means that the scale here is very dramatically different between the primes and the rest. But the primes are where all of the interesting even number sequences live, so I’m actually happy to ignore the towers and the ramp. We can zoom out even further, letting the towers and ramp disappear far above the top edge of the graph:

This is the interesting story, at least to me.

If my predictions are correct (which I hope is the case, given the data has been very nice corroboration so far).. the prime humps disappear. Then they reappear. Then they disappear again.

If that second disappearance is permanent, then it marks the end of the sequence for 16. It sure looks to be permanent – there are no new reappearances prior to k = 50000, and at k = 51217 the smallest known factors are absurdly large, and not prime (meaning we just haven’t successfully factored the number yet – not for lack of trying! If in the future, the result looks better than the product of four 15418 digit composite cofactors, thank you humanity!)

I will put on record that my favorite number is the last number to appear alongside 16 in a solution. I would love to someday know my favorite number. It ends a sequence I once dreamed of having my name on. Having it span some 1035 entries and end with a 5400 digit monstrosity is the perfect encapsulation of my mathematical essence.

The Hunt

I don’t think it is computationally feasible to find even a single solution belonging to the collection of humps between k = 7500 and k = 10000. I expect there are some 457 needles, but the haystack is of size over 49000. There are a lot of 4ders of magnitude to search.

I was able to use conspiracies to find very large solutions in the past, why won’t those work here? Well, in the past I knew precisely which factors to use, and knew that those factors would work. Here, using only the smallest factors available for every layer out to the required k, isn’t good enough. Instead, the prediction relies on natural density.

To explain, one in every 5581 numbers is divisible by 5581. That much is simple. But what proportion of numbers have a conspiracy with 432? A good fraction of the numbers that have that conspiracy have it because of 5581. But some of them have a conspiracy using other factors: 8681, 49477, 384773, 715827883, or even 2147483647. The result is something closer to one in 3153.

That difference between using the most obvious factor (5581) and using the effective density (3153) is why my second blog post on this problem didn’t find that secondary island around 9000. But for precisely that reason, I think that the hunt is far harder in the secondary island.

8819.c

I did make a program that attempted to find a solution with k = 8819. I figured it was worth a shot. What it did was start with the smallest factors, then perform a subset of the known factor replacements. With larger factors, there was still a chance that the smallest individual number having all of the right congruences came out smaller. The best I found was:

- m = mp * 960067293696380777152397 / 21632581243919

- f = fp * 835593830741749 / 6870529823845046766982513

Where mp is the product of all the smallest factors of (16p – 1)/15 for prime p < 8819 if those factors divide 4p – 1, and fp is the product of all the smallest factors of (16p – 1)/15 for prime p < 8819 if those factors divide 4p + 1. mp and fp are large large numbers, it would take more space than this heading of the blog post to write them. They are hardcoded multiprecision ints in the code.

After making these replacements, the code uses modular inversion to find the smallest integer s such that s + 4 is divisible by f, and s – 4 is divisible by m. When we get lucky, the result is far smaller than f × m.

The factor replacements needed to close a gap of roughly 130 4ders of magnitude. These replacements managed to cut a mere 15 4ders of magnitude. From combinatorics, it seems the magic factor replacements would take longer than the lifetime of the universe to fall out. Maybe in a quantum computing future, but not here, not now.

I have refrained from posting the source anywhere. It will only waste CPU cycles to continue to try before better mathematics are understood. I will wait to know my favorite number.

Conclusion

After all these years, I still love the problem. It has guided me through improving my understanding of math, code, curiosity and limitations. In 2024 I found 94123 solutions using a brute forcer that was cleverer than I could have dreamed of in 2016. I have made visuals of the landscape of a problem that only grows clearer with time. What’s more, I know that my predictions and data agree remarkably well!

I have probably made mistakes that won’t be caught for another couple years, if ever. The result is beautiful nonetheless. I hope to have this landmark viewed by others as they grow curious about something in their own lives.

Thank you for reading.